In today’s digital age, conflicts and wars have increasingly spilled over into the online sphere, amplifying the spread of disinformation and hate speech. Social media platforms have become mirrors—and magnifiers—of real-world tensions, serving both as battlegrounds and breeding grounds for digital hostility. While individual users may spontaneously promote content aligned with their political or ideological beliefs, states and non-state actors often engage in coordinated campaigns to deliberately spread false or misleading information with the intention of deceiving, manipulating, or harming. This intentional manipulation of information is referred to as disinformation—the strategic dissemination of falsehoods intended to mislead populations, damage reputations, and sow division.[1]

Concurrently, hate speech proliferates across these platforms, often incited or legitimized by political elites and power structures. It manifests as communication that expresses or incites hatred against individuals or groups based on intrinsic characteristics such as ethnicity, religion, nationality, or gender.[2] In times of conflict, hate speech is frequently weaponized to unify populations against a common enemy, justify violence, or intimidate dissenting voices.

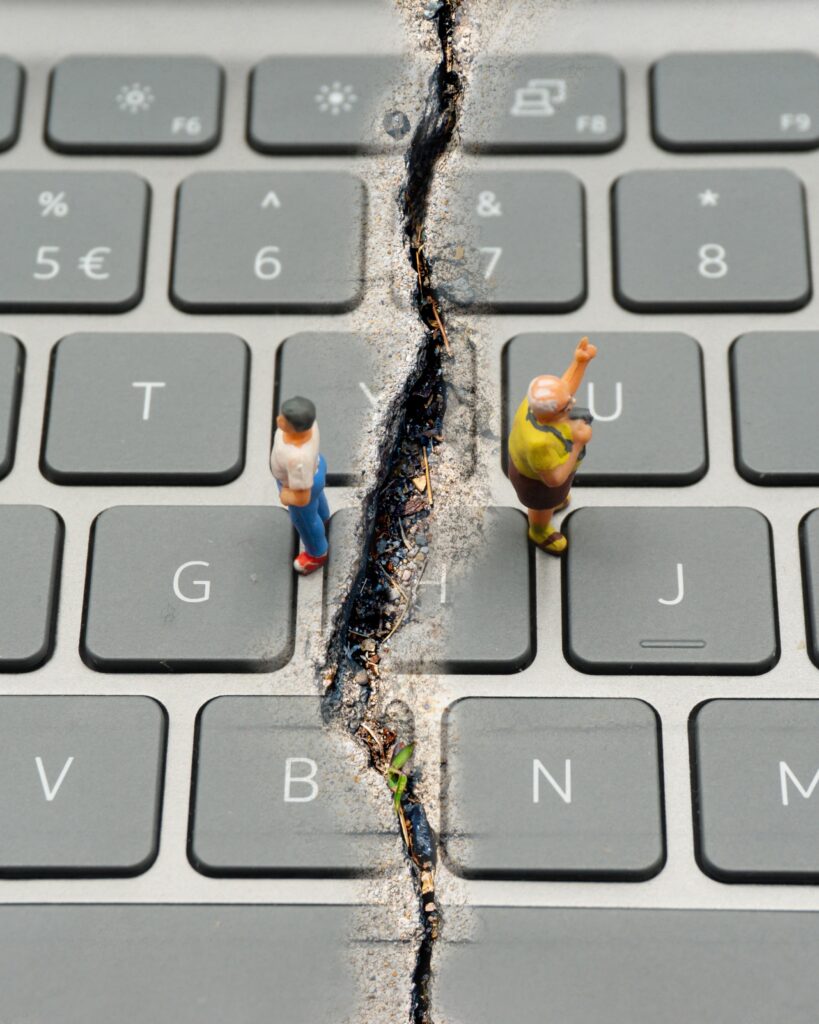

At the heart of this digital landscape lies polarization—a condition in which societies are fractured into opposing camps with rigid worldviews. Polarization intensifies as individuals become increasingly entrenched in supporting one side while wholly rejecting or dehumanizing the opposing side. This triad of disinformation, hate speech, and polarization does not merely reflect existing tensions; it actively fuels them. Together, they form a self-perpetuating cycle that deepens societal divisions, escalates hostility, and sustains conflict.[3]

The Spiral of Polarization in War

Empirical studies across numerous conflict zones reveal a recurring pattern: disinformation and hate speech surge during times of heightened political tension and erupt when conflicts escalate into violence. In such moments, social media becomes an essential tool for competing narratives. Warring parties exploit these platforms to mobilize support, justify military action, discredit opponents, and manipulate public sentiment. Disinformation campaigns often seek to undermine the enemy’s morale, instill fear, or destabilize internal cohesion. These digital campaigns are not confined to cyberspace; rather, they have tangible, often deadly, consequences. The United Nations, for instance, concluded that Facebook played a “determining role” in fueling the genocide against the Rohingya in Myanmar. Online hate did not remain virtual—it catalyzed real-world violence.[4]

Disinformation in conflict settings constitutes a sophisticated ecosystem designed not just to confuse, but to control. It distorts truth, minimizes suffering, erodes empathy, and deepens societal divides. At its core, it is an emotional and psychological weapon—an essential component of modern information warfare. This weaponization of digital spaces is evident in numerous ongoing conflicts.

In Palestine, disinformation campaigns have contributed to the vilification of victims, the denial of documented atrocities, and the legitimization of targeting journalists and humanitarian workers. Narratives that portray children and survivors as “crisis actors,” or accuse them of staging their suffering, serve to delegitimize real experiences and shield perpetrators from accountability. These strategies not only deny justice to victims but also manipulate global public opinion and shape policy discourse. While some elements may emerge spontaneously, especially during crises, the dominant thrust of such campaigns is deliberate and organized. Through coordinated networks, conflicting parties craft and disseminate content aimed at engineering consent, shifting blame, and constructing binary worldviews that leave little room for complexity, empathy, or dialogue.[5]

While these forms of content do intensify during conflict, hate speech and disinformation are often rooted in broader societal narratives, whether nationalistic, religious, or ideological. These narratives, ingrained over time, re-emerge in digital discourse as violent or misleading content. Political actors frequently amplify these stories, framing their own side as morally righteous and aggrieved. While these narratives may simplify complex realities into binary oppositions of good versus evil, social media’s algorithms intensify this reductionist thinking by privileging emotionally charged content and discouraging nuance. This environment is fertile ground for the spread of misinformation and incitement.[6]

Hate speech, disinformation, and other forms of violent content are a direct result of toxic polarization, which goes beyond normal ideological disagreement. It arises when individuals develop deep contempt for those with opposing beliefs while expressing intense loyalty and attachment to their own group’s views. This form of polarization transforms political or ideological differences into identity-based divisions, fostering the perception that the opposing side is not just wrong, but an irreconcilable enemy. Psychological research identifies three core drivers of this dynamic: dehumanization, dislike, and disagreement. When group members believe that the other side fundamentally dislikes, dehumanizes, or opposes them, polarization intensifies.[7]

This is exactly what has happened in the case of the ongoing genocide in Gaza. Since the Israeli war against Gaza broke out on October 7, 2023, a huge disinformation war has swept digital spaces, with many describing it as unprecedented. Israel launched a comprehensive disinformation and influence campaign designed to justify its military assault on Gaza and delegitimize Palestinian rights. Central to this effort was a $2 million covert operation funded by Israel’s Ministry of Diaspora Affairs, which used AI-generated content and bot farms to manipulate public opinion and promote dehumanizing narratives about Palestinians. This campaign strategically targeted U.S. lawmakers, especially Democrats, through platforms like Facebook, Instagram, and X, with tailored propaganda produced by the Israeli firm STOIC. OpenAI later disrupted some of these efforts, revealing a sophisticated operation aimed at amplifying Islamophobic and anti-Palestinian sentiment across Western digital spaces.

Simultaneously, the Israeli Ministry of Foreign Affairs launched a graphic and emotionally manipulative YouTube ad campaign across Europe and North America, designed to stir support for Israel’s military actions. According to 7amleh’s analysis, these ads, which included disturbing imagery and appeals framed in child-centered narratives, violated platform standards yet remained active. Additionally, people affiliated with Israel reached out to influencers with offers of payment and “briefing sessions” to distribute pro-Israel messaging on social media, further embedding state-sponsored narratives into grassroots digital spaces. This orchestrated manipulation highlights the asymmetric digital warfare in which Israel leverages advanced tools and vast resources to dominate discourse and suppress Palestinian voices.[8]

The war on Gaza has unequivocally demonstrated how offline conflicts can dramatically intensify online violence and disinformation. The existence of an entire Wikipedia page dedicated to tracking the vast amount of disinformation unleashed during the genocide underscores the scale of this phenomenon. It highlights not only how the offline conflict fueled a surge in false and harmful narratives online, but also how much of this disinformation was systematically driven by political actors—beyond the unconscious spread of misinformation by ordinary users.[9]

At the same time, Israel’s opponents—such as Iran—have intensified disinformation efforts, especially during the July 2025 flare-up following Israel’s offensive. A coordinated Iranian campaign using over 100 bot accounts on X (formerly Twitter) posted more than 240,000 times, aiming to sway U.S. public opinion and deter potential strikes on Iran’s nuclear facilities. The operation promoted Iran’s Supreme Leader, spread false claims of Israeli military failures, and portrayed Israel as a terrorist state. Posts used inflammatory imagery and hashtags to maximize virality and undermine the U.S.–Israel alliance. Analysts view the campaign as part of Iran’s broader strategy to manipulate global discourse and preempt military action through psychological and informational warfare.[10]

Several factors contribute to the spread of disinformation and other polarizing forms of content during conflicts. These include a widespread lack of verification and critical reasoning when assessing received information, as well as a tendency to view content as credible simply because it originates from an in-group source. Disinformation is often perceived as factual from within a specific worldview, particularly when it aligns with deeply rooted stereotypes or long-standing narratives.

Cognitive biases also play a central role: individuals tend to seek information that confirms their existing beliefs, avoid content that challenges those beliefs, and resist updating their views even when confronted with credible counterevidence. The emotional nature of conflict-related messaging—marked by fear, anger, or grief—further heightens susceptibility to manipulation and confusion. People may acknowledge that disinformation exists, yet believe that only others are vulnerable to it, reinforcing blind spots in their own judgment. The echo chamber effect deepens these dynamics by limiting exposure to alternative perspectives, while disinformation cloaked in the language of public, institutional, or scientific authority gains unwarranted legitimacy. In some cases, exposure to accurate information can paradoxically entrench belief in falsehoods, as individuals reinterpret or reject the truth in ways that reinforce their original convictions. Together, these dynamics create a fertile environment for disinformation to thrive and polarization to intensify.[11]

The Role of Social Media

Social media platforms have played a significant role in escalating conflict by incentivizing divisive and potentially violence-inducing speech, often amplifying content that fosters polarization and mass harassment through algorithms optimized for engagement metrics like shares, comments, and time spent. While platforms have primarily addressed violent conflict through reactive content moderation—responding to specific incidents or outbreaks—this approach fails to address the deeper, systemic design issues that fuel conflict dynamics long before violence erupts. Independent investigations, such as the one regarding Facebook’s role in Myanmar, have shown how platforms can facilitate the incitement of offline violence by fomenting division. Research supports a complex picture: social media correlates with both polarization and increased political knowledge, but the former—especially toxic polarization, where people demonize opposing groups—is a more dangerous prelude to violence than mere policy disagreements.[12]

The NYU Stern report “Fueling the Fire: How Social Media Intensifies U.S. Political Polarization – And What Can Be Done About It” concludes that although social media is not the root cause of political polarization in the United States, it plays a critical role in intensifying affective polarization—deep-seated hostility and contempt between political groups—which in turn erodes democratic norms, weakens trust in institutions, and fuels real-world violence. The report highlights how engagement-based algorithms systematically amplify divisive content, and despite occasional internal measures, platforms have largely failed to regulate themselves.[13]

Offline and online violence operate as a reinforcing cycle, in which any offline incident can fuel online violence and vice versa. A prominent example from Palestine illustrating the role of social media was documented by 7amleh. The organization analyzed the digital buildup to the February 2023 attack on the village of Huwara in the West Bank, where hundreds of Israeli settlers carried out a violent assault that resulted in the killing of one Palestinian, widespread property destruction, including the torching of crops and vehicles, attacks on homes, and the terrorizing of residents. In the months leading up to the attack, 7amleh identified over 15,000 pieces of violent Hebrew-language content on social media platforms that directly targeted the village and its inhabitants. This content served to delegitimize, smear, and dehumanize the local Palestinian population, portraying them in inhumane and threatening terms. Such digital incitement laid the groundwork for real-world violence by normalizing hostility and justifying aggression against the community.[14]

From Division to Dialogue: Reversing Polarization

Much can be done to undo prejudice and polarization; one hypothesis is that communication itself helps. Contact between groups or conflicting parties can have a significant impact, but only if it is done properly. Sometimes, contact can worsen polarization. For example, following opponents on X might consolidate one’s own extreme ideas or prejudice against the other side. If contact is sustained and involves a respectful exchange of ideas among people of similar standing, such as the same rank or age, it can reduce polarization. Governments, civil society organizations, media, social media platforms, and other actors can intervene to spread the perspectives of others. Listening to stories from the perspective of others could help reduce much of the prejudice and polarization. However, social media has done more harm than good by allowing people to see and engage mainly with like-minded people and narratives rather than opening them to other perspectives.[15]

To counter this, initiatives like Beyond Conflict recommend public awareness campaigns to expose the widespread misperceptions partisans hold about each other and call for holding influential figures accountable when they spread misinformation. Practical strategies to mitigate polarization include fostering intergroup dialogue, encouraging empathy through perspective-taking, and highlighting internal disagreements within political groups to disrupt rigid “us vs. them” narratives. Additionally, promoting kindness on social media can help reduce the normalization of dehumanization, while avoiding the repetition of misinformation and refraining from prejudiced jokes can weaken the social acceptability of divisive rhetoric.[16]

Other approaches advocate for a shift from reactive moderation to proactive, long-term, and scalable interventions rooted in platform design. The proposal is that platforms move away from engagement-based content ranking in sensitive contexts, limit mass dissemination capabilities, and introduce design features that foster meaningful, connecting interactions. Platforms should also integrate support for peacebuilding efforts, acknowledging that peace is not just the absence of violence but the presence of social conditions where all communities can flourish. Ultimately, conflict escalates through reinforcing cycles, and breaking this spiral requires disrupting the incentives that reward division and manipulation online.[17]

Other recommendations call for comprehensive structural reforms, urging social media platforms to transparently redesign their algorithmic systems to reduce the spread of inflammatory content. For example, the NYU Stern report underscores the importance of investing in robust, in-house content moderation teams and fostering deeper collaboration with civil society organizations. It also highlights the critical role of government intervention—advocating for legislation that mandates transparency, empowers regulatory agencies to enforce conduct standards, and supports the development of alternative digital platforms that promote democratic engagement. Ultimately, the report frames unchecked polarization driven by social media as a direct threat to democratic stability, one that demands urgent, coordinated action across sectors.[18]

Conclusion

Social media platforms and digital tools play a significant and often detrimental role in fueling violence, division, and polarization, both in times of peace and, more acutely, during war. Conflicting parties actively exploit these platforms to amplify their narratives, frequently resorting to disinformation and hate speech as strategic tools to delegitimize the other side. Social media offers a cost-effective and far-reaching means to disseminate such content, making it a powerful instrument in modern information warfare. Crucially, the impact of this content is not confined to the digital realm. It operates within a reinforcing cycle, intensifying polarization, deepening societal fractures, and ultimately inciting offline violence. This dynamic underscores the urgent need for platform accountability and a fundamental rethinking of how digital infrastructures intersect with conflict dynamics.

[1] Stavros, A., S. Phalen, S. Almakki, M. Nacionales-Tafoya, & R. A. García. 2023. “Disinformation in Conflict Environments in Asia.” Gerald R. Ford School of Public Policy, University of Michigan.

[2] 7amleh (Arab Center for the Advancement of Social Media). 2022. “A Guide to Combating Online Hate Speech.”

[3] Polarization Research Lab. 2022. “How Do You Study and Reverse Political Animosity? These Researchers Are Working to Answer That Question.” Charles Koch Foundation.

[4] Stavros et al. 2023.

[5] Barforoush, S., & S. Plaut. 2024. “Information Disorder in Times of Conflict.” Canadian Museum for Human Rights.

[6] Cobb, S., S. Kaplan, A. Marc, & G. Milante. 2021. “The Role of Narrative in Managing Conflict and Supporting Peace.” Discussion paper, IFIT, Institute for Integrated Transitions, Barcelona.

[7] Psico‑smart Editorial Team. 2024. “The Role of Technology in Conflict Mediation: Exploring Digital Tools and Platforms.” Psico-smart Blog.

[8] Qadi, A. 2025. “From Bot Farms to Censorship: Israel’s Disinformation Warfare against Palestinians.” Palestine Chronicle.

[9] “Misinformation in the Gaza War.” 2025. Wikipedia.

[10] Kahana, A. 2025. “Iran Deployed Bots to Post 240K Times to Block US Strikes on Nuclear Facilities.” NYP.

[11] Lewandowski, P. 2024. “Psychological Mechanisms of Disinformation and Their Impact on Social Polarization.” Studia Polityczne 2: 85–104. Łukasiewicz Research Network – ITECH Institute of Innovation and Technology.

[12] Stray, J., R. Iyer, & H. Puig Larrauri. 2023. “The Algorithmic Management of Polarization and Violence on Social Media.” Knight First Amendment Institute.

[13] Barrett, P. M., J. Hendrix, & J. G. Sims. 2021. “Fueling the Fire: How Social Media Intensifies U.S. Political Polarization and What Can Be Done about It.” Center for Business and Human Rights, Stern School of Business, New York University.

[14] 7amleh (Arab Center for the Advancement of Social Media). 2023. “An Analysis of the Israeli Inciteful Speech against the Village of Huwara on Twitter.”

[15] Moskalenko, S. 2023. “What Are the Solutions to Political Polarization?” Greater Good Magazine, Greater Good Science Center, University of California.

[16] Psico‑smart Editorial Team. 2024. “The Role of Technology in Conflict Mediation: Exploring Digital Tools and Platforms.” Psico-smart Blog.

[17] Stray, J., R. Iyer, & H. Puig Larrauri. 2023. “The Algorithmic Management of Polarization and Violence on Social Media.” Knight First Amendment Institute.

[18] Barrett, P. M., J. Hendrix, & J. G. Sims. 2021. “Fueling the Fire: How Social Media Intensifies U.S. Political Polarization and What Can Be Done about It.” Center for Business and Human Rights, Stern School of Business, New York University.

Photography

Visual metaphor about disinformation and polarization in the digital environment. Author: Evan Huang (Shutterstock).